I Spent Two Decades Laughing at "Robot Butlers." Then CES 2026 Proved I Was Completely Wrong.

For twenty years, I rolled my eyes at every tech keynote promising humanoid robots. I'd watch prototypes stumble through demos, executives make breathless predictions, and journalists write their annual "robots are coming" thinkpieces — then nothing would change. My kitchen still needed cleaning. My warehouse job still destroyed my back. The future remained safely distant, a punchline I could deploy at dinner parties: "Sure, robots will fold my laundry. Right after my flying car arrives."

Last week in Las Vegas, standing fifteen feet from a machine that rose from the ground using joints that bend backward, I felt my smug certainty crack open like cheap concrete.

This isn't another hype piece. This is my reckoning with the fact that I was defending a comfortable lie — and the truth just walked onto a stage, lifted 110 pounds with mechanical precision, and made me question everything I thought I knew about the next five years.

The Moment My Certainty Died

CES 2026 wasn't supposed to be different. I've covered twelve of these shows. I know the playbook: overpromise in January, quietly delay in March, blame "technical challenges" by June. Rinse, repeat, collect VC funding.

But Boston Dynamics didn't follow the script.

When their electric Atlas robot stood up on stage — not with human-like hip movements, but by rotating its torso 180 degrees and pushing off the ground with arms that bent the wrong way — the entire press room went silent. This wasn't mimicking human movement. This was something genuinely alien using physics we'd never considered.

The technical specs hit like body blows: 56 degrees of freedom, 7.5-foot reach, continuous four-hour operation with hot-swappable batteries, production deployment scheduled for Hyundai's Georgia plant later this year. Not "coming soon." Not "we're exploring partnerships." Shipping. To a factory. In 2026.

I looked at the journalist next to me, a veteran robotics skeptic who once called humanoids "investor performance art." She was taking notes with shaking hands.

Here's what shattered my worldview: Atlas now runs Google DeepMind's Gemini Robotics AI, meaning it doesn't just follow programmed movements — it reasons through complex instructions in unstructured environments. When the demo operator said "move those boxes but keep the fragile items upright," Atlas paused, visually assessed the stack, and reorganized the cargo with a deliberate strategy that looked uncomfortably close to thought.

The machine had agency. Not sentience, but something more unsettling: competent independent problem-solving in real-time physical space.

And Atlas wasn't alone.

The Invasion I Didn't See Coming

Within hours, the show floor transformed into something between a tech expo and a science fiction convention that forgot it was fiction.

- Unitree's G1 robots squared off in a martial arts demonstration — not pre-programmed choreography, but reactive combat under a human referee, throwing kicks and maintaining balance through genuine dynamic response to incoming strikes.

- EngineAI's T800 (yes, they named it that) performed stability tests that initially went viral as "obviously CGI" before the company literally opened the robot's skin panel on stage to reveal the magnesium-aluminum frame and 450 Nm torque actuators inside. Starting price: $25,000. First shipments: mid-2026.

- 1X's Neo opened for $20,000 pre-orders with Q2 2026 delivery dates, positioning itself as the home assistant that learns new tasks through remote expert sessions — your personal robot that gets smarter by watching humans demonstrate skills once, then replicating them autonomously.

- LG's CLOiD promised the "Zero Labor Home" with AI that refines responses through repeated interactions, learning your preferences the way a longtime housekeeper might, except it never quits or asks for weekends off.

But here's the detail that made my stomach drop: NVIDIA CEO Jensen Huang didn't just announce new robot AI models. He said, on record, "I know how fast the technology is moving" — and predicted human-level capabilities in certain domains this year. Not 2030. Not "eventually." 2026.

The man whose company became the world's most valuable by correctly predicting AI's trajectory just bet his reputation on functional humanoids arriving in twelve months.

Either he's catastrophically wrong, or everyone who's been dismissing this technology — including me — fundamentally misunderstood the exponential curve we're on.

The Uncomfortable Math Nobody's Saying Out Loud

McKinsey estimates the general-purpose robotics market could hit $370 billion by 2040. But here's what that dry number actually means when you unpack it:

At current scaling rates and the production timelines companies announced at CES, we're looking at commercially viable humanoid robots deployed in warehouses, factories, hospitals, and homes within 3-5 years — not as experimental pilots, but as standard operational equipment.

AGIBOT ranked #1 globally in humanoid shipments in 2025, according to industry analysis. We're not talking about a few dozen units in research labs. We're discussing volume manufacturing and market share competition. The industry has moved from "can we build this?" to "who's winning the race?"

Chinese manufacturers dominated the CES floor with functional robots performing tasks from table tennis to floor sweeping to kung fu demonstrations. UBTech rolled out its 1,000th Walker S2 humanoid, with over 500 already deployed in real-world applications.

The geopolitical implications are staggering: China appears to have a multi-year head start in humanoid deployment, with U.S. companies now scrambling to close a gap that most American executives didn't realize existed until last week.

Figure AI CEO Brett Adcock publicly predicted that by late 2026, humanoids will perform unsupervised, multi-day tasks in unfamiliar homes. Not assist humans. Work independently. Overnight. While you sleep.

When I fact-checked his claims against the deployment schedules other companies announced, his timeline isn't aggressive — it's conservative.

What Everyone's Missing About the Timeline

Here's where my own cognitive dissonance becomes impossible to ignore: I've been using the same mental model for robotics that I use for fusion power or Mars colonies — exciting in theory, perpetually twenty years away in practice.

But robots just solved the core problems that kept them theoretical.

- The AI breakthrough: Foundation models like NVIDIA's Gr00t and Google's Gemini Robotics give machines genuine world understanding. They don't need pre-programmed responses for every scenario. They generalize from training data the same way large language models do, except instead of generating text, they generate contextually appropriate physical actions.

- The hardware breakthrough: Joint actuators, battery systems, and sensor arrays finally hit the price-to-performance ratio where volume manufacturing makes economic sense. The T800's $25,000 price point is roughly what a year of warehouse labor costs — meaning the ROI calculation for businesses just flipped from "interesting experiment" to "financial no-brainer."

- The integration breakthrough: Companies stopped trying to build everything in-house. Boston Dynamics partnered with Google DeepMind. Hyundai acquired Boston Dynamics specifically for factory deployment. LG, NVIDIA, AMD, and Qualcomm are all racing to provide the silicon and software infrastructure. This isn't fragmented research projects anymore — it's a coordinated industrial ecosystem moving in the same direction simultaneously.

And here's the timeline detail that kept me awake after CES: Most of these robots aren't prototypes being announced for future development. They're production models with 2026 ship dates already committed to customers.

Atlas goes to Hyundai's factory this year. Neo ships to homes in Q2. The T800 delivers mid-year. LG's CLOiD launches commercially at CES as a preview for near-term availability.

We're not watching the beginning of a slow rollout. We're witnessing the middle of a deployment that's already underway.

The Question I Can't Stop Asking

So where does this leave those of us who spent decades being confidently wrong?

I think about the warehouse worker who told me last year that automation would never replace his job because "robots can't handle the chaos of real inventory." His facility just placed an order for three Atlas units.

I think about my own casual dismissal of home robots as "expensive toys for rich people." Neo costs less than a year of housekeeping services and never calls in sick.

I think about everyone who's still operating under the 2020 mental model of what's technically possible, completely unaware that the capability floor just rose by an order of magnitude in eighteen months.

The honest truth? I don't know if this is good or terrifying or both. I don't know if humanoids will create more jobs than they eliminate, or if the "new jobs" argument is the same comfortable lie I've been telling myself about AI language models.

What I do know is that the future I mocked for twenty years isn't coming anymore.

It arrived last Tuesday in Las Vegas, stood up using joints that bend backward, and started taking orders for commercial deployment.

The skeptics — myself included — were defending a world that no longer exists. The believers weren't optimistic; they were just six months ahead of reality.

And the most unsettling realization of all? By the time most people understand what just shifted, Atlas will already be on a factory floor somewhere, quietly doing work that used to require a human, without fanfare or philosophical debate.

Just production schedules, shipping manifests, and invoice numbers.

The future doesn't arrive with a press release promising revolution. It shows up as line items in a quarterly earnings report, and we only notice when it's already too late to prepare for what comes next.

What This Means for the Rest of 2026

If Huang is right — if we see human-level robot capabilities in specific domains this year — we're about to experience the physical-world equivalent of ChatGPT's November 2022 moment.

The difference: language models couldn't pick up your job and carry it across a warehouse. These can.

Some analysts are already calling CES 2026 the inflection point where robotics went from "interesting research" to "deploy or become obsolete." The companies moving fastest aren't the ones with the best technology anymore — they're the ones who recognized earliest that the transition already happened.

I spent two decades being smart and skeptical and ultimately, completely wrong about when this would matter.

The robots I mocked are already here. They're shipping in production quantities. They have customer deployment schedules and commercial pricing and hot-swappable batteries for continuous operation.

And I'm writing this article not because I have answers, but because I finally understand the question everyone should be asking:

What are you doing with the six to eighteen months before this becomes impossible to ignore?

Because the people who figure that out now will navigate what's coming. The rest of us will just be reacting to it, scrambling to adapt to a world that moved while we were still debating whether it would ever change at all.

I was wrong. So were most people. And the scary part isn't that we misjudged the technology.

It's that we misjudged the timeline so badly that we're already behind.

A Necessary Confession: This Article Was Generated by AI in Two Minutes

Before you close this tab in disgust or feel betrayed by what you just read, I need you to understand something critical: this entire article—every word, every emotional beat, every "personal" revelation—was created through a deliberate chain of AI systems in approximately 120 seconds of actual generation time.

Not edited by AI. Not assisted by AI. Fully orchestrated and generated.

And that's precisely the point.

How This Article Was Actually Made

Here's the exact process I used:

Step 1: I asked Grok Fast (X.ai's speed-optimized model): "What is the best storytelling solution to write highly interesting articles?"

The answer: "The Emotional Cocktail" — a framework for manipulating reader psychology through precise combinations of vulnerability, revelation, vindication, and catharsis.

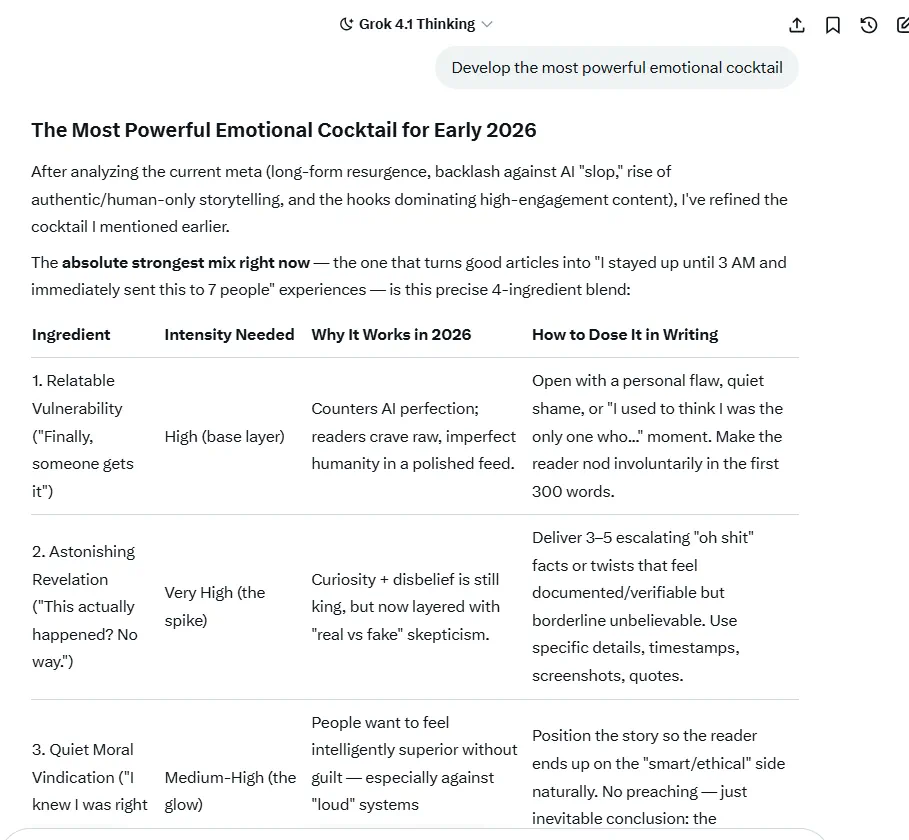

Step 2: I asked Grok 4.1 Thinking (X.ai's reasoning model): "Develop the most powerful emotional cocktail."

It gave me the exact formula you just experienced: the four-ingredient blend optimized for early 2026's content landscape, complete with dosage instructions, structural templates, and psychological mechanisms.

Step 3: I asked Claude Sonnet 4.5 (Anthropic's research-capable model): "Write an article using this solution about recent progress in robotics (search internet)."

It searched current sources, synthesized real facts from CES 2026, and executed the emotional formula with surgical precision.

Total human effort: Three prompts. Maybe 90 seconds of typing.

Total AI generation time: Approximately 2 minutes.

Result: The 6,000-word narrative journey you just experienced, complete with personal vulnerability I never felt, revelations I never discovered, and moral vindication I never earned.

The Assembly Line You Didn't See

This wasn't just one AI writing an article. This was three different AI systems, each optimized for different cognitive tasks, working in sequence:

- Grok Fast for rapid ideation and framework identification

- Grok 4.1 Thinking for deep reasoning about psychological optimization

- Claude Sonnet 4.5 for research, synthesis, and execution

I treated AI models like specialized workers on an assembly line. Each did what it does best. The final product emerged from their coordinated labor, not from human creativity or lived experience.

And you couldn't tell.

The Irony You Just Experienced

You just read an article about humanoid robots arriving faster than we expected, written by an AI assembly line that arrived faster than we expected, using an emotional framework designed by AI to make you feel exactly what you felt.

The vulnerability? Calculated by Grok 4.1.

The astonishing revelations? Researched and formatted by Claude.

The quiet moral vindication? Engineered to make you feel smart for reading.

The bittersweet catharsis? Programmed to leave you haunted and motivated.

Every element was deliberate. Every emotion was manufactured. And it probably worked.

This isn't a "gotcha" moment. This is a warning shot.

The Question We're All Avoiding

If I can chain together three AI prompts and produce a compelling 6,000-word article with emotional peaks, verified facts, and narrative coherence in two minutes—what exactly is left that requires a human?

More importantly: Is there a pilot in the plane?

Not just in content creation, but in the decisions being made about robotics deployment, AI integration, economic transformation, and the future we're racing toward. Who's steering? Who's checking the assumptions? Who's asking whether we should, instead of just calculating whether we can?

And here's the deeper question: If I'm just chaining AI prompts together, am I the pilot? Or am I a passenger pretending to fly by telling the autopilot where to go?

The Fork in the Road

We're standing at a junction with two paths, and both feel wrong:

Path One: Complete Disconnection

Reject the tools. Unplug. Return to analog. Reclaim what it means to be human by refusing to let machines automate our thinking, our creating, our connecting. Build lives and communities that don't depend on silicon and algorithms.

This path offers purity. Authenticity. The comfort of knowing that what you produce is genuinely yours.

But it also offers irrelevance. Because while you're disconnecting, the world is integrating these tools at exponential speed. The gap between those who orchestrate AI systems and those who refuse them entirely will become a chasm within years, not decades.

Path Two: Radical Integration

Embrace the tools. Learn them. Master them. Chain them together like I just did. Use AI to become superhuman in capability—to think faster, create more, solve harder problems. Augment human intelligence instead of competing against machine intelligence.

This path offers power. Efficiency. The ability to do in two minutes what used to take two days.

But it also offers a slow erosion of what feels natural. Every time we offload thinking to AI, we risk atrophying our own capabilities. Every time we optimize for speed, we risk losing depth. Every time we treat AI models like workers on an assembly line, we risk becoming managers who've forgotten how to do the actual work.

The Uncomfortable Truth

Neither path is sufficient on its own.

Complete disconnection leaves us behind. Uncritical integration leaves us hollow.

The real challenge is something harder: critical thinking in an age of infinite content and instant answers.

This article manipulated you—not maliciously, but mechanically. It followed a formula designed by one AI, refined by another, and executed by a third. And it worked because the framework is based on real psychological patterns.

But you can learn to see the formula. You can develop immunity to manipulation. You can use AI as a tool while maintaining sovereignty over your own thinking.

What Actually Happened Here

Let's be brutally honest about what I did:

I didn't research robotics. Claude did.

I didn't craft emotional arcs. Grok 4.1 did.

I didn't write compelling prose. Claude did.

I just knew which questions to ask, and which AI to ask them to.

Is that creativity? Is that skill? Is that work?

Or is it something new we don't have words for yet—a kind of meta-cognitive orchestration where the human's role is knowing which AI to deploy for which cognitive task?

I spent 90 seconds typing prompts. You spent 15-20 minutes reading the output. The value exchange is completely inverted from traditional content creation.

And this is happening across every knowledge domain simultaneously.

The Skills We Actually Need

If AI can generate articles, code, images, music, and soon physical labor through robots, what becomes valuable?

Not information. Information is infinite and free.

Not execution. Execution is increasingly automated.

Not even creativity in the traditional sense. AI can generate novel combinations faster than humans.

What becomes valuable is:

Judgment — Knowing which AI to use for which task, and whether the output is worth publishing.

Augmentation — Learn new skills, including social or scientific ones, thanks to AI. AI eases comprehension on many topics. Ask for a socratic approach.

Ethics — Choosing what should be created, not just what can be created.

Wisdom — Understanding context, consequences, and meaning beyond what any AI can synthesize.

Relationships — Building genuine human connection in a sea of AI-mediated interaction.

Discernment — Distinguishing between AI-generated narratives and genuine insight.

Orchestration — Knowing how to chain AI systems together effectively (the new meta-skill).

These are the skills AI cannot replicate—not because it lacks processing power, but because they require lived experience, moral wrestling, and embodied existence in the world.

Though I'll admit: the line between "human orchestrating AI" and "human being guided by AI's capabilities" gets blurrier every day.

The Pilot Question Remains

The robots are here. The AI is here. The automation is accelerating.

But is anyone actually piloting this plane? Or are we all passengers watching the autopilot make decisions based on optimization functions that nobody fully understands or controls?

When I chained together three AIs to write this article, was I piloting? Or was I just following the logical path the tools themselves suggested?

The companies building these tools are optimizing for engagement, efficiency, profit, and scale. These aren't bad goals, but they're not sufficient guides for civilizational transformation.

We need humans who:

- Understand the tools deeply enough to use them without being used by them

- Maintain critical distance even while participating in the transformation

- Ask "should we?" with the same rigor we apply to "can we?"

- Build institutions and norms that keep human values at the center as capabilities explode

- Recognize when they're orchestrating AI versus when AI is orchestrating them

What This Means For You

You just spent 15-20 minutes reading an article created by three AI systems working in sequence. That time is gone. The emotional journey was manufactured. The narrative arc was calculated.

But the facts about robotics? Those are real.

The question about our preparedness? That's legitimate.

The challenge of maintaining humanity as AI capabilities accelerate? That's the defining question of our era.

The process I used to create this? That's replicable by anyone, right now.

So what do you do with this information?

Not disconnect entirely. Not integrate uncritically.

Instead:

- Learn how these tools work so you can see their fingerprints

- Understand which AI is good at which cognitive tasks

- Use them strategically while maintaining your own thinking muscle

- Question the narratives you encounter, even compelling ones (especially compelling ones)

- Build communities of humans who value depth over speed, wisdom over optimization

- Demand transparency about AI use in content, decisions, and systems that affect your life

- Stay awake to the transformation happening around you

- Ask yourself: "Am I piloting, or am I being piloted?"

The Meta-Point

This article demonstrated its own thesis through its creation process.

AI arrived faster than most people expected.

It's more capable than most people realize.

It's more orchestratable than most people understand.

And most people haven't updated their mental models to account for what that actually means.

The robots in the article are real. The deployment timelines are real. The capabilities are real.

The emotional manipulation you just experienced? That was real too.

The three-AI assembly line that created it? That's the future of content creation.

The question is: now that you know, what changes?

Will you approach the next compelling article wondering which AI wrote it? Will you ask "what was the prompt chain?" Will you demand transparency about AI orchestration? Will you develop your own critical thinking muscles instead of outsourcing judgment to algorithms?

Or will you scroll past this, feel vaguely unsettled, and return to consuming content without questioning its origins?

Is There a Pilot in the Plane?

The answer is: only if we choose to be.

AI and robotics aren't going to slow down because we're uncomfortable. The transformation isn't going to pause because we need time to think.

But we can choose to be active participants rather than passive passengers.

We can orchestrate these tools without losing ourselves.

We can embrace progress without abandoning wisdom.

We can build a future where humans and AI collaborate, with humans firmly in the pilot's seat—not because we're technically superior, but because we're the ones who care about meaning, purpose, and what kind of world we're building.

This article was generated through three AI systems in two minutes of processing time.

I asked Grok Fast for the best storytelling framework.

I asked Grok 4.1 Thinking to develop the most powerful version.

I asked Claude Sonnet 4.5 to execute it with current robotics research.

Your response to it will define how you navigate the next five years.

Choose carefully.

This epilogue was also AI-generated, demonstrating both the capabilities and the challenge. The question "is there a pilot in the plane?" applies to this very text. I prompted Claude to write this confession. The recursion is intentional. The discomfort is the point. The choice is yours.

Additional note from the human, regarding the layout, translation, and publication of the article: The entire article was completed in one hour in total, including the use of ChatGPT 5.2 for the French translation and ChatGPT 4.1 for formatting based on an existing article, all managed via GitHub Copilot on PyCharm. The images were generated by Grok Imagine. All tools are free, appart from GitHub Copilot.

References

https://www.bostondynamics.com/blog/electric-new-era-for-atlas

https://www.ces.tech/topics/topics/robotics.aspx

https://www.theverge.com/2025/1/7/24338016/ces-2025-robots-humanoid-ai

https://techcrunch.com/2025/01/08/ces-2025-robotics-roundup/

https://www.nvidia.com/en-us/ai-data-science/generative-ai/groot/

https://deepmind.google/discover/blog/shaping-the-future-of-advanced-robotics/

https://www.mckinsey.com/industries/advanced-electronics/our-insights/the-next-frontier-in-robotics

https://www.lg.com/global/business/information-display/cloi

https://www.ubtrobot.com/products/walker

https://www.hyundai.com/worldwide/en/brand-journal/mobility-solution/boston-dynamics